Azure Synapse Serverless vs Databricks SQL Analytics (as of August 2021)

Azure Data Lakehouse architecture combines the best elements of data lakes and data warehouses. Delta file format, combined with low cost storage, enables new ways of working with data pipelines and machine learning workloads.

Users can use Azure Synapse Dedicated Pools for data warehousing workloads, and Databricks for advanced analytics and ad-hoc data exploration.

However, I miss a clear view on what technology I should use to query my data stored in Azure Data Lake Gen 2. How to query parquet or delta files efficiently? How to run simple analytics? There are data virtualization product like Dremio; AWS has Lambda. Is there anything else that I can use in Azure?

In this article I would like to compare Azure Synapse Serverless and Databricks SQL Analytics as query engines on top of Azure Data Lake Gen 2 data.

This article is a vendor neutral attempt to compare Azure Synapse and Databricks when using open data formats. The approach taken uses TPC-DS analytics queries to test performance and available functionalities. However, this is not a thorough performance comparison. Underlying data, Azure Synapse Serverless and Databricks can be further tweaked to optimize query results.

Questions:

- Which is faster - Synapse Serverless or Databricks SQL endpoint?

- Is Delta equally supported across Synapse Serverless and Databricks SQL?

- When to use Synapse Serverless and when Databricks SQL?

- What is the performance with OPENROWSET AND EXTERNAL tables?

- What is the cost of using Synapse Serverless and Databricks SQL?

For sure there are hundreds of other questions related to SQL compatibility, caching, developer friendliness, cluster vs. endpoint, etc. For now, let’s limit the scope to the questions above.

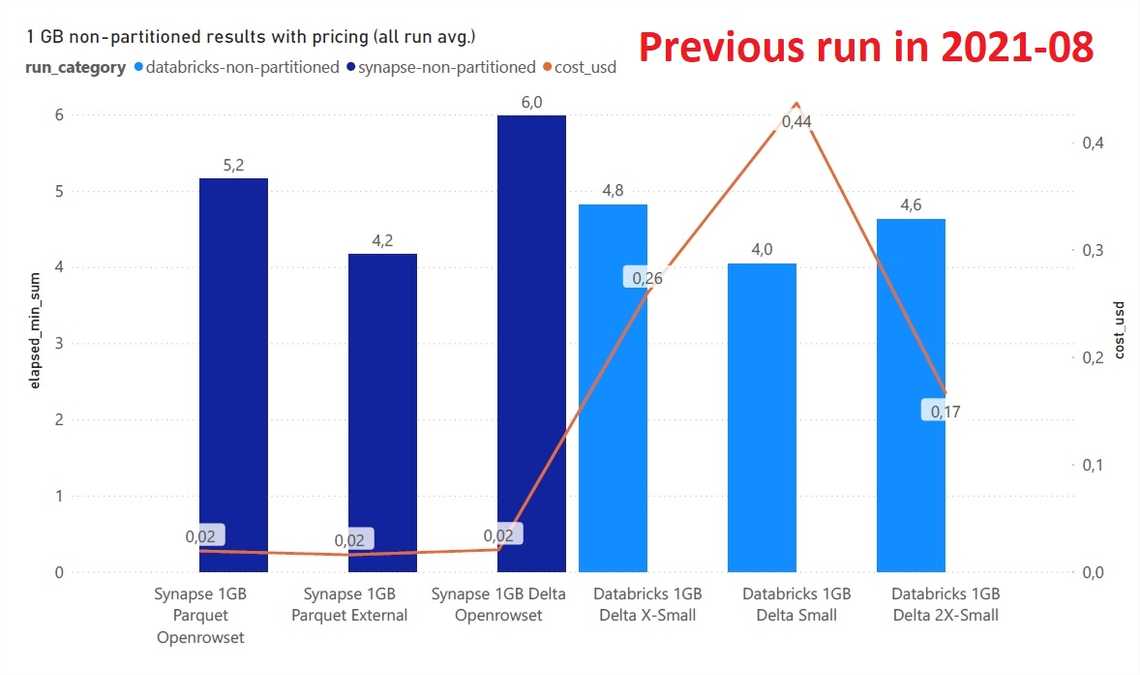

Changelog

2022-03-02 - Rerun tests as there were major upgrades on both platforms

- Upgrade Databricks pricing (from Ev3-series to E8ds_v4)

- Rerun tests for 1GB ONLY - observed ~30% Databricks improvement, while no major upgrade for Synapse

2021-08-05 - Extend tests

- Compare 10 GB partitioned and 10 GB non-partitioned

- Compare 1 TB partitioned and 1 TB non-partitioned

- Include parquet test runs for Databricks 10 GB and 1 TB runs

- Remove consumption entries for Synapse “warm-up” queries

2021-08-01 - Add dbt analysis model

- Publish collected log data on GitHub

- Define performance comparison model

- Aggregations per run, per dataset, with/without pricing

- Host dbt docs

2021-07-28 - Synapse run upgrades to have a fair comparison

- Use OPENROWSET for parquet and delta

- Explicitly define schema and use optimal data types

- Enforce partition usage with partitioned views

2021-07-20 - Initial release

Table Of Contents

Preparations

- Define game rules

- Deploy Azure resources

- Get data

- Register databases and tables

- Configure testing environment with JMeter

- Define cluster sizes and pricing

- Execution log analysis

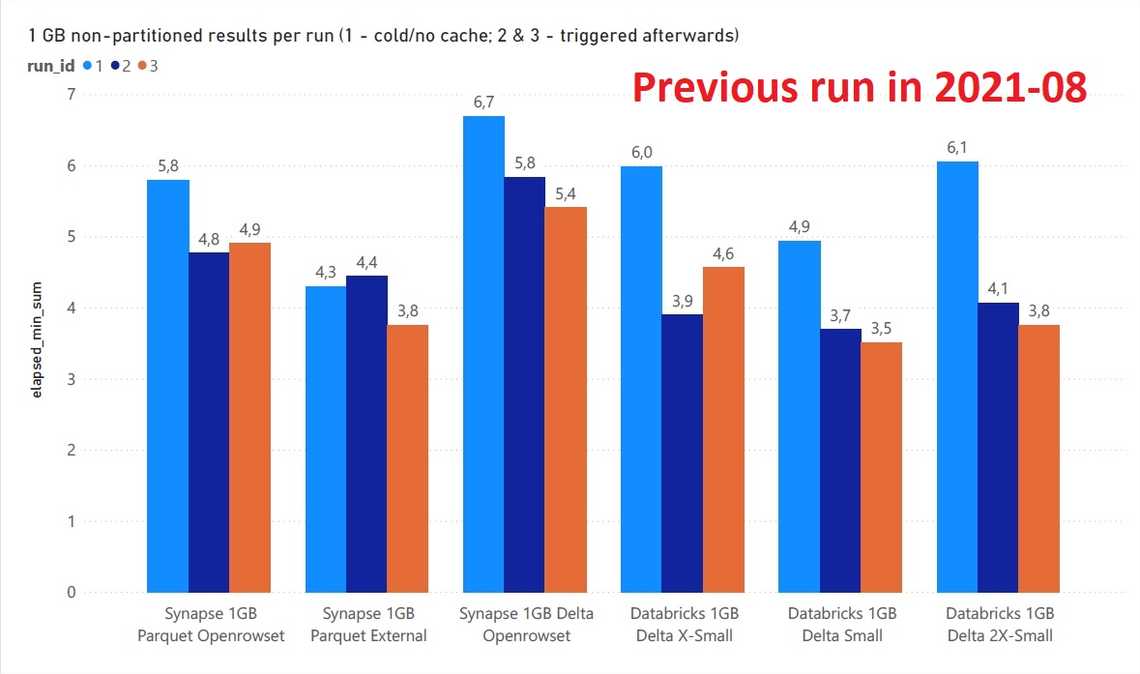

Round 1 - 1 GB non-partitioned

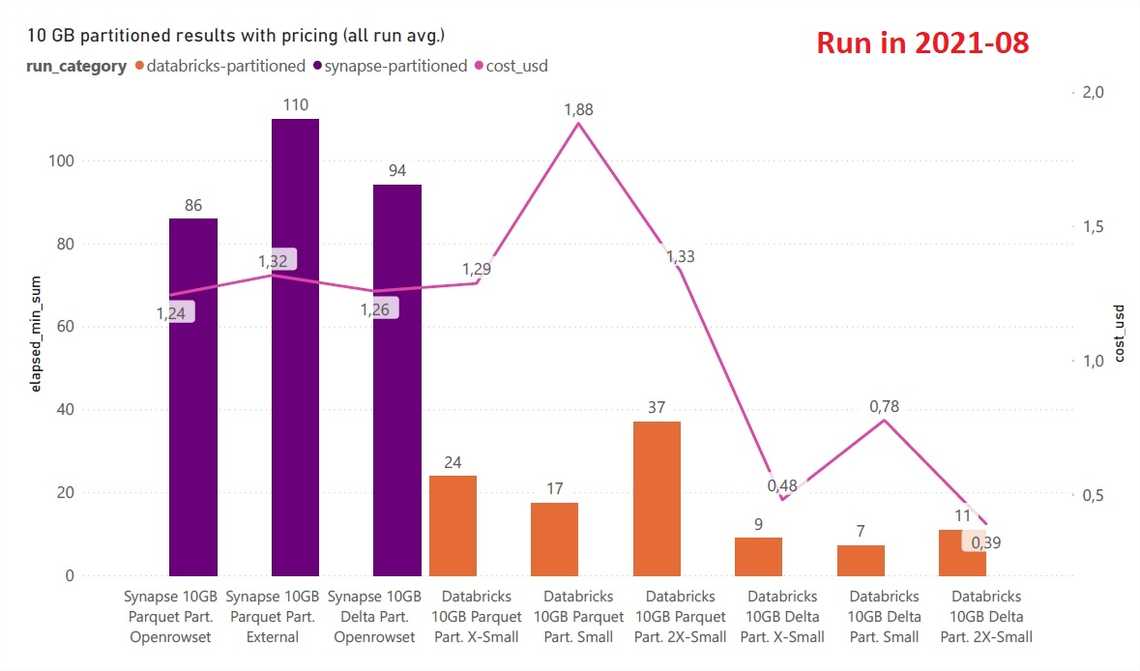

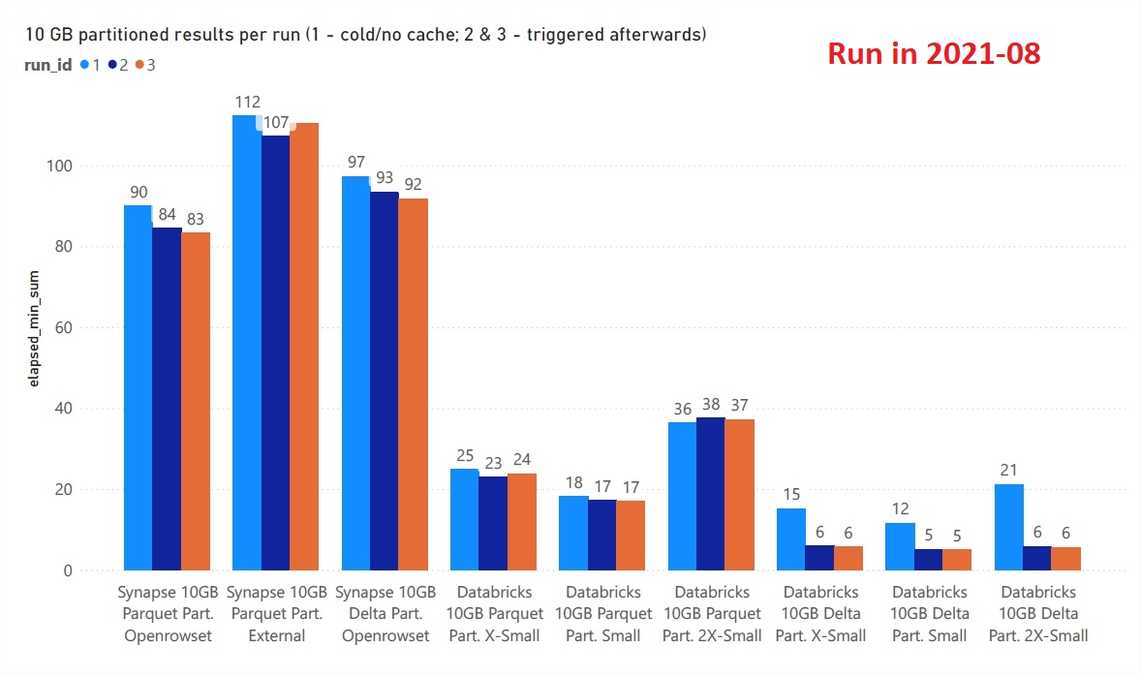

Round 2 - 10 GB partitioned

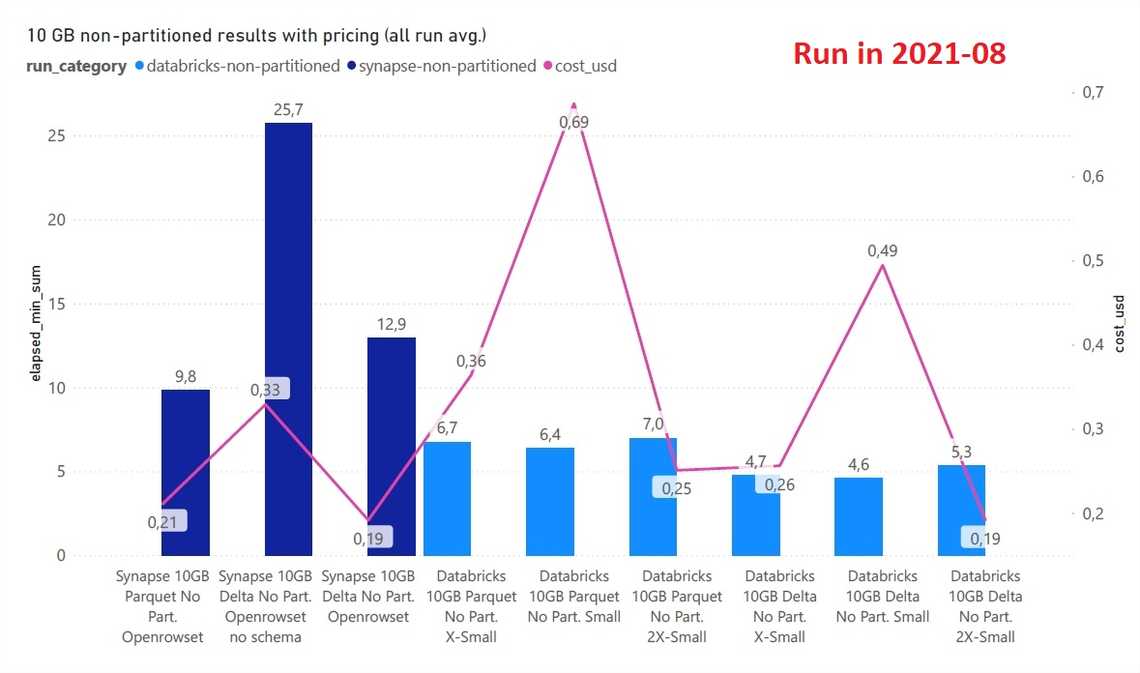

Round 3 - 10 GB non-partitioned

Round 4 - 1 TB partitioned

Round 5 - 1 TB non-partitioned

Final observations

Preparations

Define game rules

Use TPC-DS benchmark data to compare Synapse Serverless and Databricks SQL Compute performance and execution cost.

Choices:

- All data in data lake for both platforms (no preloading to SQL pools or dbfs)

- Both platforms run on the same data

- No concurrent queries

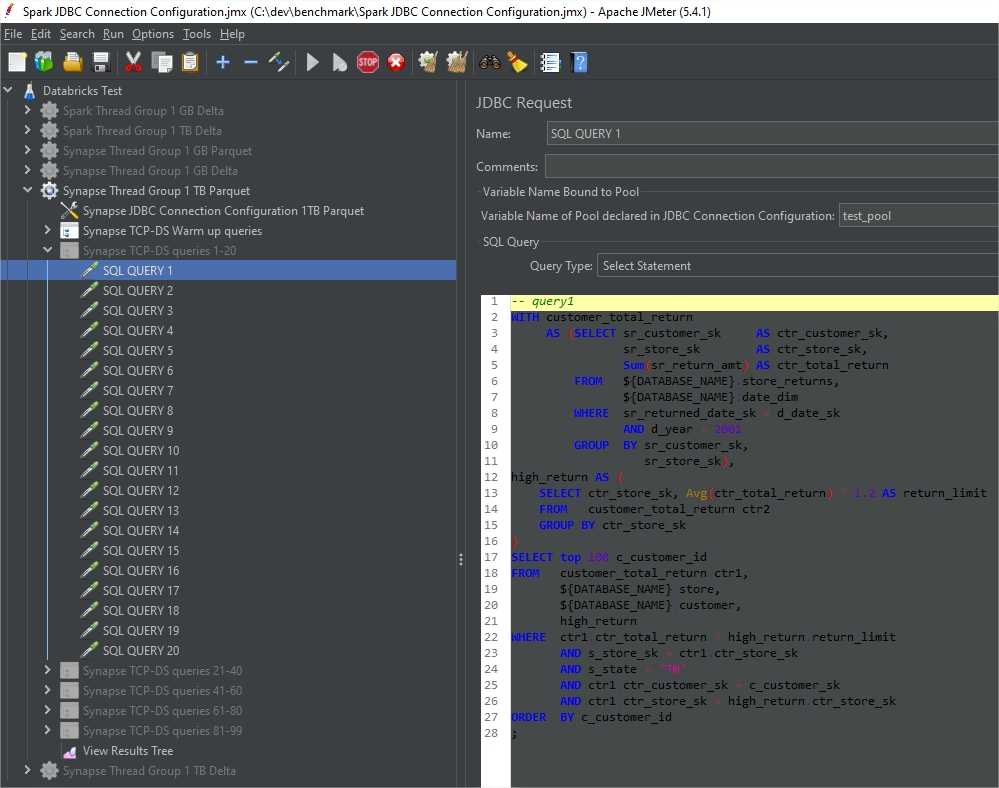

- 90 analytical queries with warm-up queries, 3 runs

- Use JMeter to run all queries

- High level cost estimates (Synapse provides per query pricing, while SQL Analytics has hourly pricing)

- Databricks caches data, while Synapse Serverless doesn’t have caching. Hence, include cached and non-cached results.

- Databricks SQL endpoints take up to 5 mins to start and lowest inactivity of 10 mins before cluster shut down. I’ve decided not to include it in pricing as I would have also include this time in duration, which didn’t make sense to me.

- User-friendliness (ease of creating objects, tables, UI)

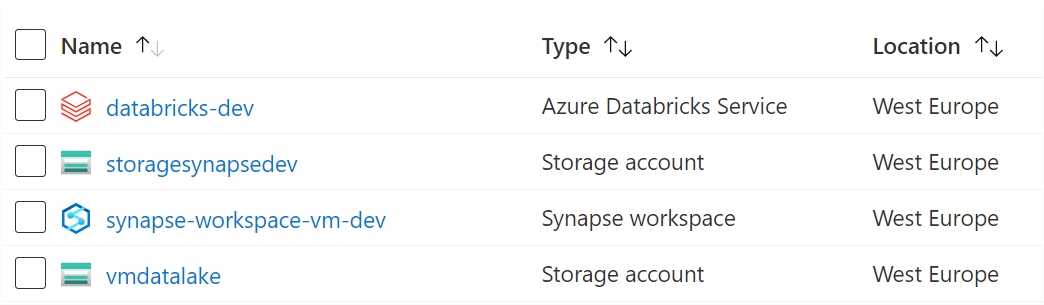

Deploy Azure resources

- Azure Synapse Workspace (serverless only)

- Databricks with enabled SQL Analytics

- Azure Data Lake Gen 2 storage

Get data

Getting data for testing is always a challenge, but luckily there are bright people who created datasets for such benchmarks.

Here are a few links ( link 1, link 2, link 3 ) that helped me to generate required data based on TCP-DS

I have three datasets: 1 GB, 10 GB and 1 TB:

| Table | Avg Row Size in Bytes | 1 GB row counts | 10 GB row counts | 1 TB row counts |

|---|---|---|---|---|

| call_center | 305 | 6 | 24 | 42 |

| catalog_page | 139 | 11,718 | 12,000 | 30,000 |

| catalog_returns | 166 | 143,672 | 1,439,882 | 144,004,725 |

| catalog_sales | 226 | 1,439,935 | 14,400,425 | 1,439,976,202 |

| customer | 132 | 100,000 | 500,000 | 12,000,000 |

| customer_address | 110 | 50,000 | 250,000 | 6,000,000 |

| customer_demographics | 42 | 1,920,800 | 1,920,800 | 1,920,800 |

| date_dim | 141 | 73,049 | 73,049 | 73,049 |

| household_demographics | 21 | 7,200 | 7,200 | 7,200 |

| income_band | 16 | 20 | 20 | 20 |

| inventory | 16 | 11,745,000 | 133,110,000 | 783,000,000 |

| item | 281 | 18,000 | 102,000 | 300,000 |

| promotions | 124 | 300 | 500 | 1,500 |

| reason | 38 | 35 | 45 | 65 |

| ship_mode | 56 | 20 | 20 | 20 |

| store | 263 | 12 | 102 | 1,002 |

| store_returns | 134 | 288,279 | 2,877,632 | 288,009,578 |

| store_sales | 164 | 2,879,789 | 28,800,501 | 2,879,966,589 |

| time_dim | 59 | 86,400 | 86,400 | 86,400 |

| warehouse | 117 | 5 | 10 | 20 |

| web_page | 96 | 60 | 200 | 3,000 |

| web_returns | 162 | 71,772 | 720,274 | 72,002,305 |

| web_sales | 226 | 718,931 | 7,200,088 | 719,959,864 |

| web_site | 292 | 30 | 42 | 54 |

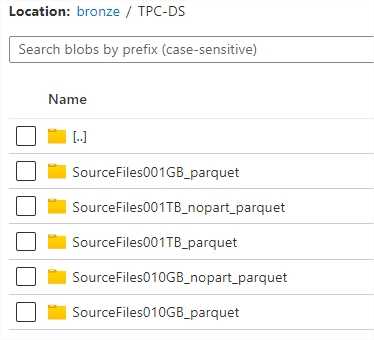

Azure Data Lake Gen 2 bronze zone stores originally generated data (1GB, 10 GB and 1TB datasets) in parquet format.

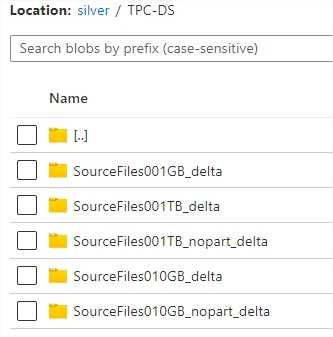

Silver zone is used to store optimized datasets, converted to delta format. Benchmark tests will run datasets in delta format.

Eventually, there are 5 data versions:

- 1 GB delta with no partitions

- 1 GB parquet with no partitions

- 10 GB delta partitioned

- 10 GB delta with no partitions

- 10 GB parquet partitioned

- 10 GB parquet with no partitions

- 1 TB delta partitioned

- 1 TB delta with no partitions

- 1 TB parquet partitioned

- 1 TB parquet with no partitions

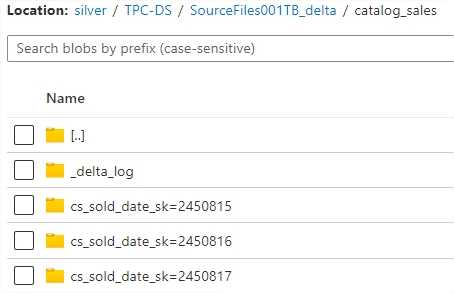

Define partitions

10 GB and 1 TB parititioned database transaction tables are partitioned as following:

- catalog_returns PARTITIONED BY cr_returned_date_sk

- web_returns PARTITIONED BY wr_returned_date_sk

- web_sales PARTITIONED BY ws_sold_date_sk

- store_returns PARTITIONED BY sr_returned_date_sk

- store_sales PARTITIONED BY ss_sold_date_sk

- inventory PARTITIONED BY inv_date_sk

- catalog_sales PARTITIONED BY cs_sold_date_sk

Spark-sql-perf library generated data uses ”HIVE_DEFAULT_PARTITION” for NULL value in partition names. Unfortunately, this value is not supported by Synapse partitions. Hence, I manually edit HIVE_DEFAULT_PARTITION to 0 in following tables: Synapse has issues with

- catalog_sales: cs_sold_date_sk=HIVE_DEFAULT_PARTITION to cs_sold_date_sk=0

- web_returns: wr_returned_date_sk=HIVE_DEFAULT_PARTITION to wr_returned_date_sk=0

- web_sales: ws_sold_date_sk=HIVE_DEFAULT_PARTITION to ws_sold_date_sk=0

- store_returns: sr_returned_date_sk=HIVE_DEFAULT_PARTITION to sr_returned_date_sk=0

- store_sales: ss_sold_date_sk=HIVE_DEFAULT_PARTITION to ss_sold_date_sk=0

Register databases and tables

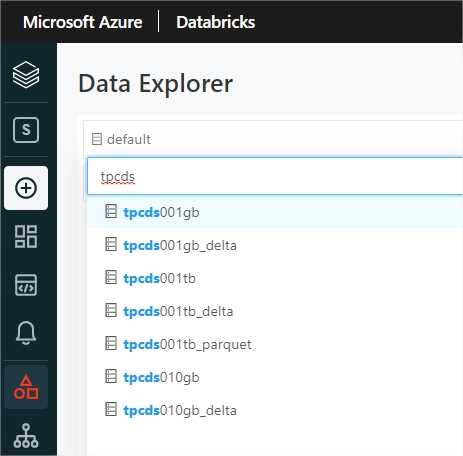

Databricks databases and tables

All databases are registered in Databricks metastore. Databases separate 1 GB, 10 GB 1TB datasets, delta from parquet table versions, partitioned data from non-partitioned.

Spark-sql-perf created tables on top of parquet automatically. I’ve moved the files in addition to silver and converted to delta.

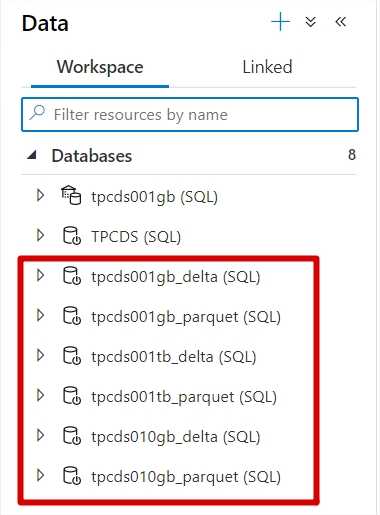

Synapse databases and tables

I explicitly define schema and use optimal data types and enforce partition usage with partitioned views.

View definition with partitions (example with DELTA)

CREATE VIEW dbo.inventoryASSELECT *FROM OPENROWSET(BULK 'TPC-DS/SourceFiles010GB_delta/inventory/',DATA_SOURCE = 'vmdatalake_silver',FORMAT = 'DELTA')WITH (inv_date_sk integer,inv_item_sk integer,inv_warehouse_sk integer,inv_quantity_on_hand integer) as a

Also, I decided to check parquet performance with OPENROWSET and EXTERNAL tables.

View definition without partitions (example with PARQUET)

CREATE VIEW dbo.inventoryASSELECT *, CAST(a.filepath(1) AS integer) AS [inv_date_sk]FROM OPENROWSET(BULK 'TPC-DS/SourceFiles010GB_parquet/inventory/inv_date_sk=*/',DATA_SOURCE = 'vmdatalake_bronze',FORMAT = 'PARQUET')WITH (inv_item_sk integer,inv_warehouse_sk integer,inv_quantity_on_hand integer) as a

Parquet as external table

CREATE EXTERNAL TABLE [dbo].[inventory](inv_date_sk integer,inv_item_sk integer,inv_warehouse_sk integer,inv_quantity_on_hand integer)WITH(LOCATION = 'TPC-DS/SourceFiles010GB_parquet/inventory/inv_date_sk=*/',DATA_SOURCE = vmdatalake_bronze,FILE_FORMAT = parquet_format)

Define queries

There are 90 analytical queries + 24 “warmup” queries (not included in duration calculations). 9 queries were removed as some were failing with Spark SQL (Syntax error or access violation / Query: AEValueSubQuery is not supported) and a few for Synapse. Hence, I left only succeeding queries for both platforms.

Configure testing environment with JMeter

JMeter is used often in such testing scenarios. I followed the steps here to set it up on my machine.

To connect to Databricks SQL, I used Databricks JDBC driver.

And for Synapse Serverless, I used Microsoft JDBC Driver for SQL Server

Define cluster sizes and pricing

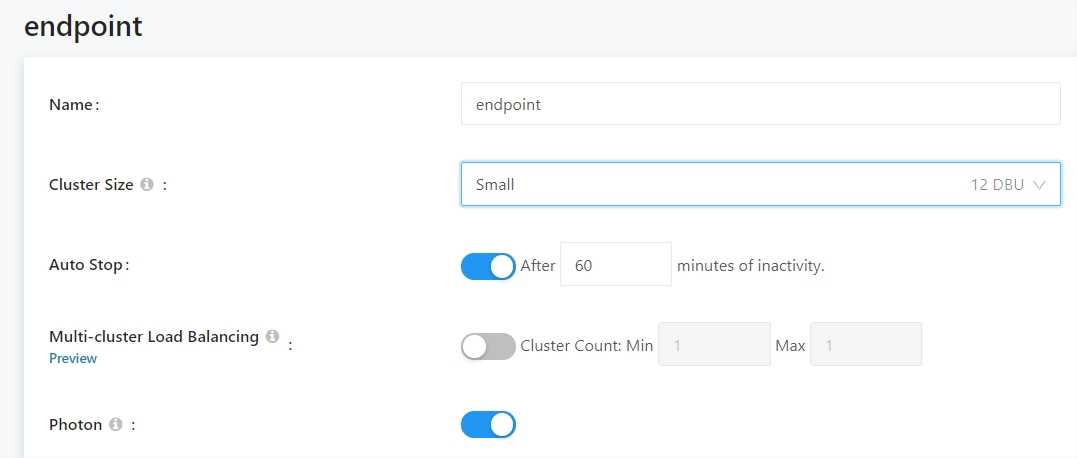

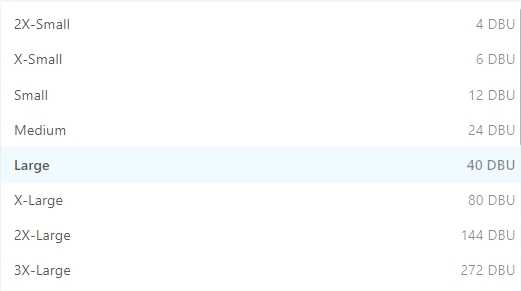

Databricks SQL Analytics endpoints

I don’t test concurrent queries, so I disable Multi-cluster Load Balancing

Azure Databricks bills you for virtual machines (VMs) provisioned in clusters and Databricks Units (DBUs) based on the VM instance selected.

Azure Databricks does not charge you until the cluster/endpoint is in a “Ready” state

- SQL Endpoint (compute) price - $0.22/DBU-hour

Total hourly price for SQL Endpoints:

2X-Small - 4 x $0.22/DBU-hour + 2 x $0.58/VM-hour (Standard_E8ds_v4)

X-Small - 6 x $0.22/DBU-hour + 3 x $0.58/VM-hour (Standard_E8ds_v4)

Small - 12 x $0.22/DBU-hour + 4 x $0.58/VM-hour (Standard_E8ds_v4) + 1 x $1.15/VM-hour (Standard_E16ds_v4)

Medium - 24 x $0.22/DBU-hour + 8 x $0.58/VM-hour (Standard_E8ds_v4) + 1 x $2.3/VM-hour (Standard_E32ds_v4)

Large - 40 x $0.22/DBU-hour + 16 x $0.58/VM-hour (Standard_E8ds_v4) + 1 x $2.3/VM-hour (Standard_E32ds_v4)

Disks, Blob storage, IP addresses are billed separately.

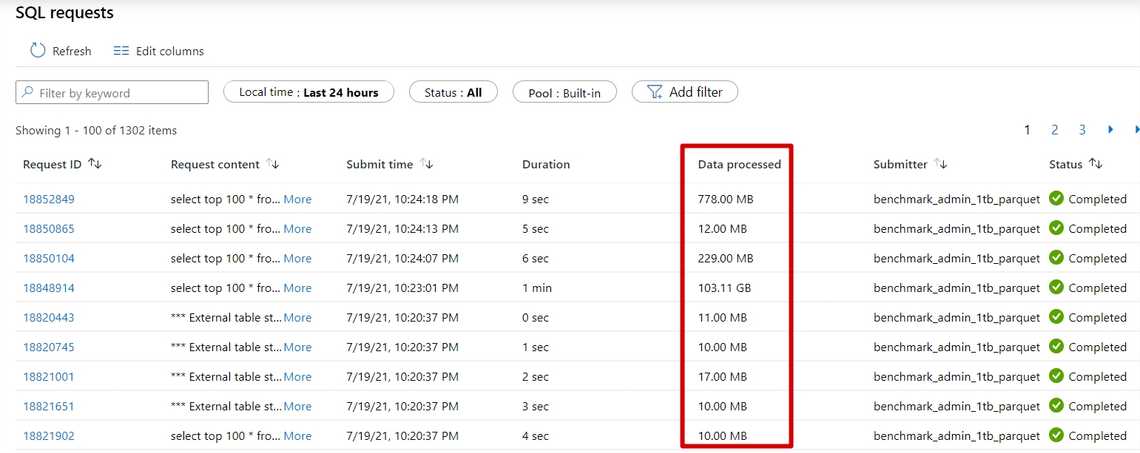

Azure Synapse Serverless

You only pay for executed queries and the pricing is based on the amount of data processed by each query. Metadata-only queries (DDL statements) do not incur a cost. Queries will incur a minimum charge of 10 MB and each query will be rounded up to the nearest 1 MB of data processed.

Price: $5 per TB of data processed

All the executed queries are visible in the monitoring tab. To be able to separate tests, I’ve used different SQL users (Submitter) to know which run processed data. It was not possible to filter by the serverless pool name.

Warmup queries are not included in consumption calculation nor in query execution time

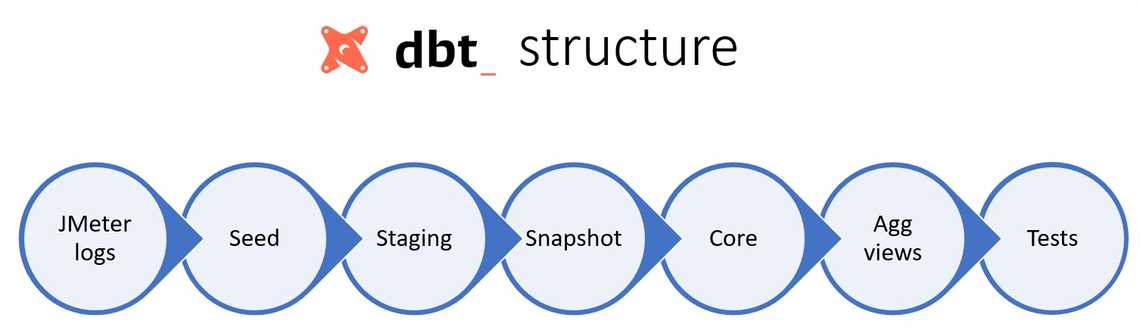

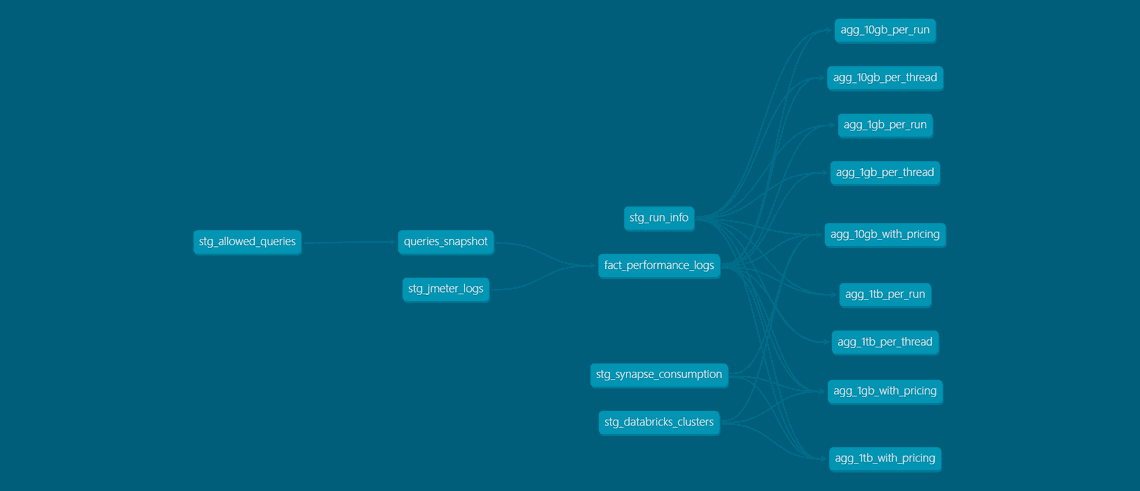

Execution log analysis

JMeter produces log files in CSV format. I use dbt (Data Build Tool), SQL Analytics as compute and PowerBI as visualization tool.

Dbt project is responsible for all log unification, aggregation logic, etc.

Hosted dbt docs contain more information about lineage, columns, etc.

Finally, I use PowerBI to create simple visualizations (fetches data from SQL Analytics).

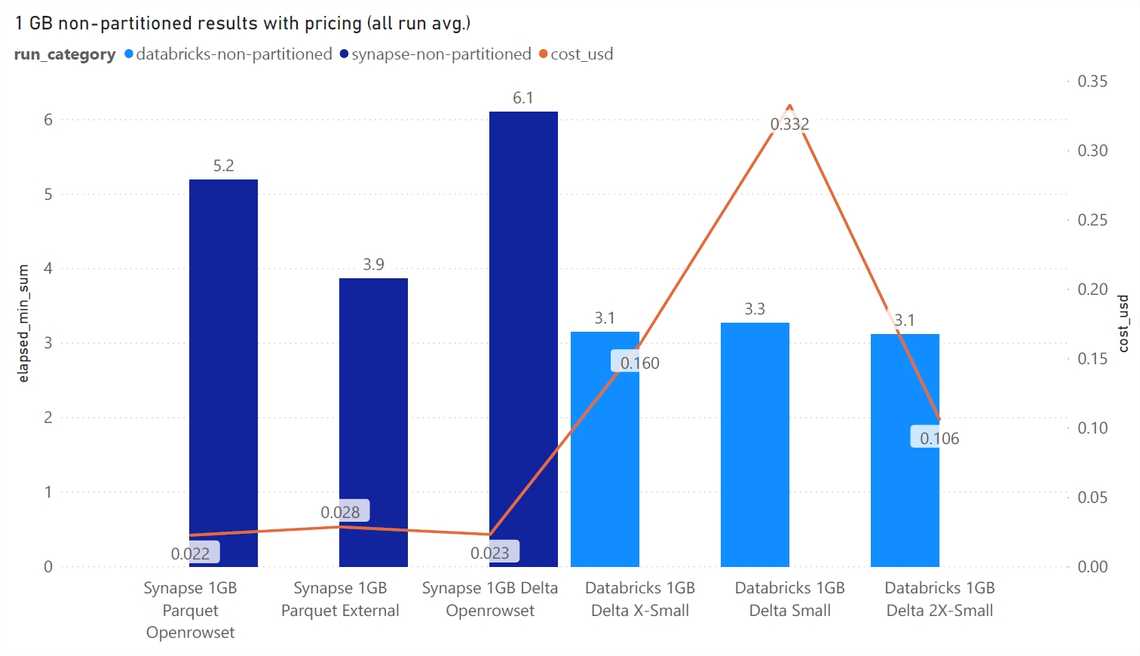

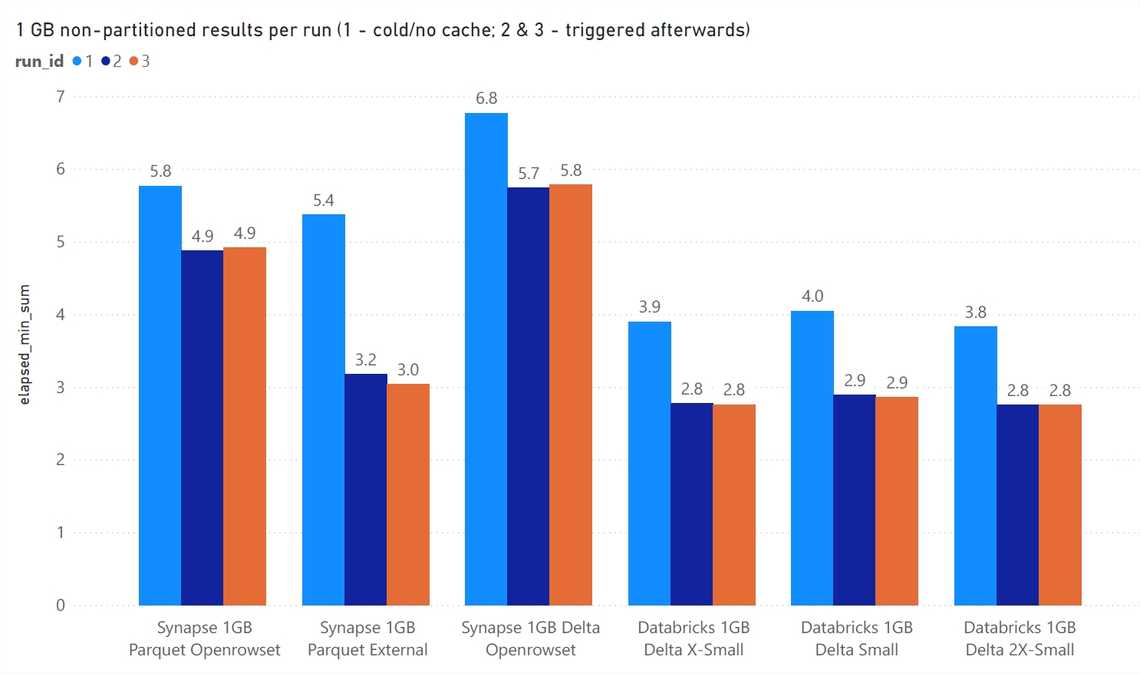

Round 1 - 1GB non-partitioned

Previous runs

1 GB non-partitioned results overview

- Synapse doesn’t have cache, provides rather stable results for all runs. While Databricks speeds up with cache for runs no. 2 and 3

- Synapse performs better with PARQUET than DELTA

- Databricks, as expected, performs better with DELTA

- Databricks is more expensive (not included minimal 10 mins inactivity shutdown).

Winner - Azure Synapse Serverless with external tables on parquet files. It provides consistet performance without the need to create/start clusters. Also, Synapse Serverless comes with access management and access APIs similar to SQL Server world.

Round 2 - 10 GB partitioned

10 GB partitioned results overview

- Databricks speeds up with cache for DELTA (no speed difference for PARQUET between the runs)

- Databricks runs ~2-3 faster on DELTA compared to PARQUET

- Synapse Serverless performs very poorly with large number of files.

- Even the least powerful Databricks cluster is almost 3 times faster than Serverless

- Synapse seems to be slightly faster with PARQUET over DELTA

Winner - Databricks SQL Analytics is a faster and cheaper alternative, and better with DELTA.

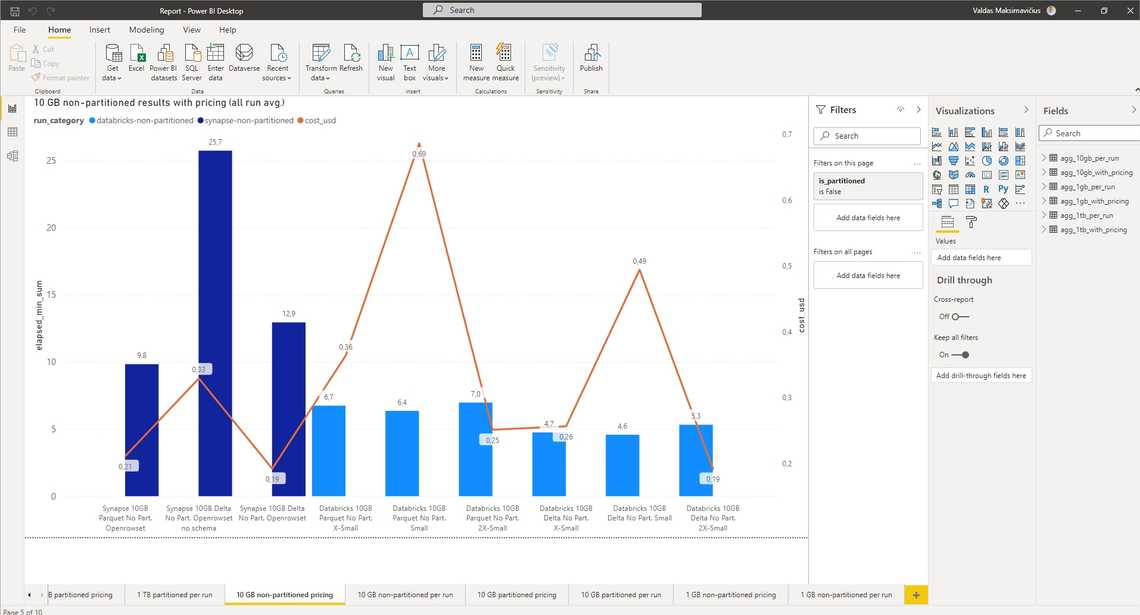

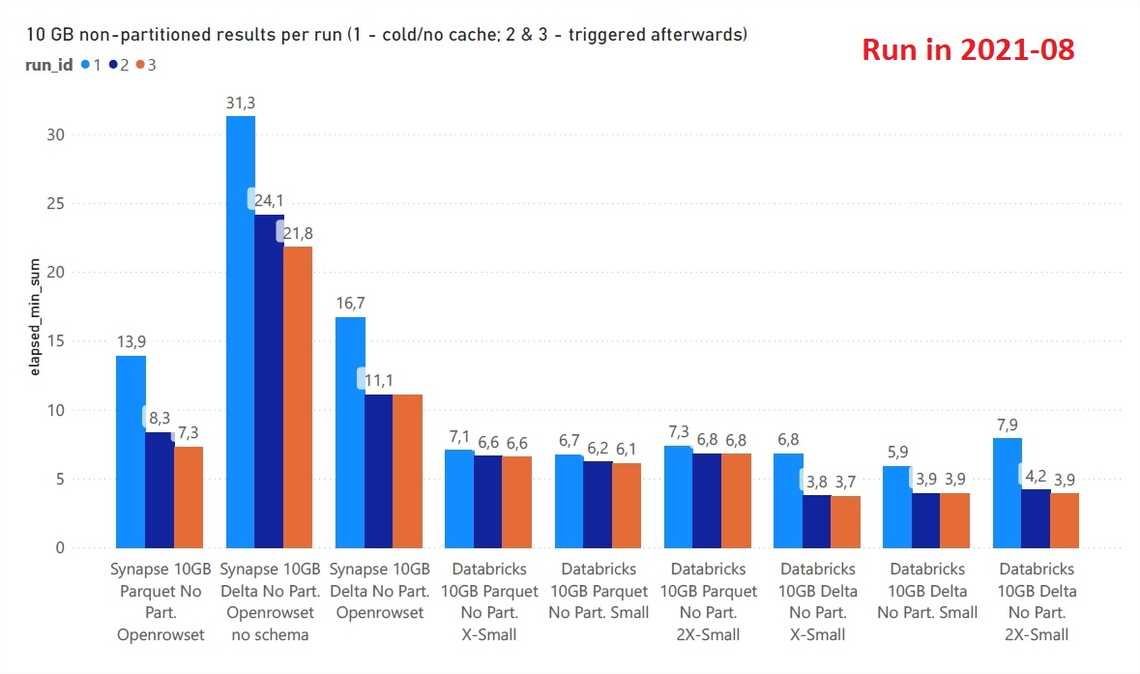

Round 3 - 10 GB non-partitioned

10 GB non-partitioned results overview

- Both Databricks and Synapse run faster with non-partitioned data. The difference is very big for Synapse.

- Synapse with defined columns and optimal types defined runs nearly 3 times faster

- Synapse Serverless cache only statistic, but it already gives great boost for 2nd and 3rd runs.

- Different Databricks clusters almost give the same results. How to decide what cluster to choose?

- Databricks speeds up with cache for DELTA (no difference for PARQUET)

- Synapse seems to be slightly faster with PARQUET over DELTA

Winner - The execution time considerably shorter compared to partitioned data, but still Databricks SQL Analytics is a faster for the same cost.

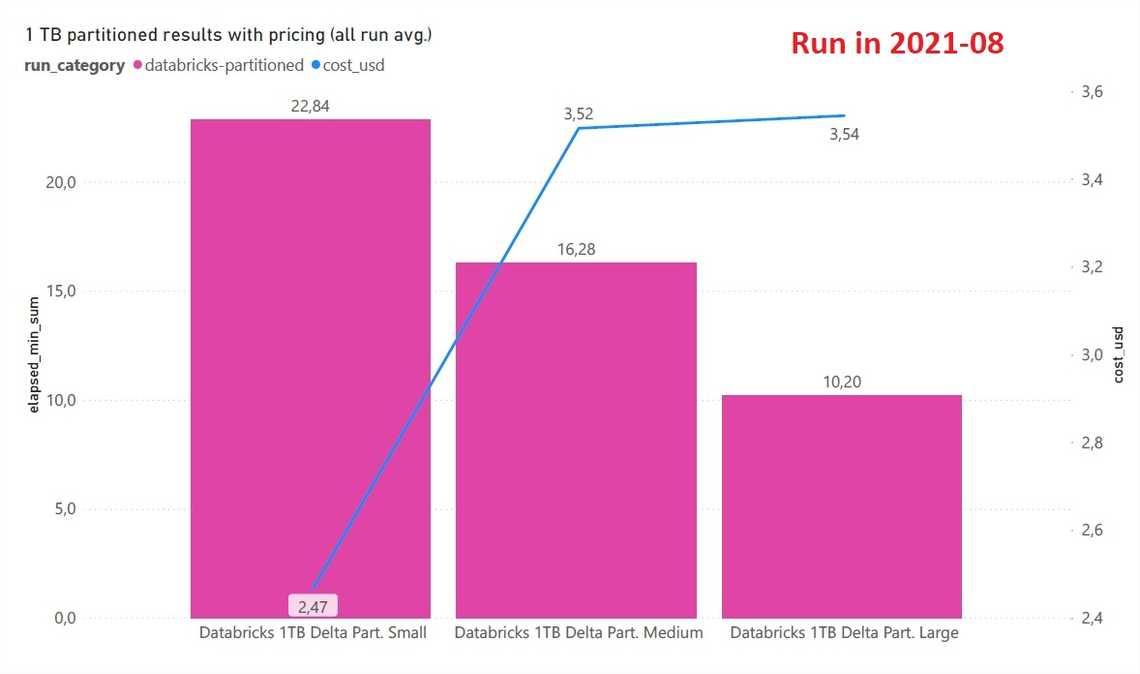

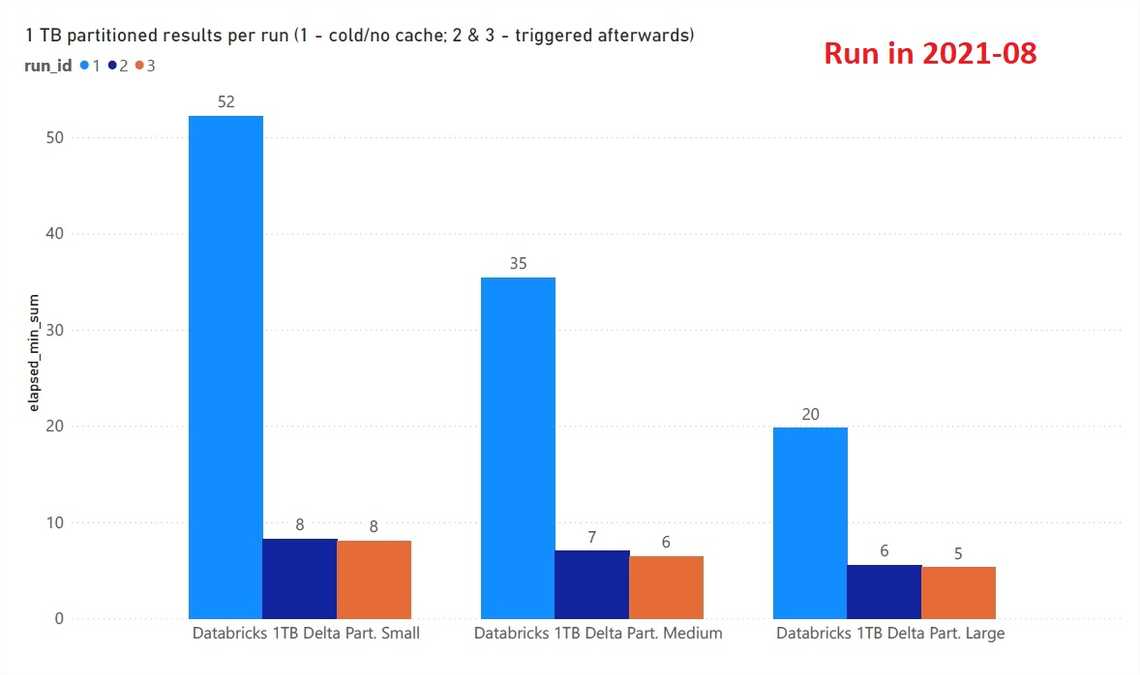

Round 4 - 1 TB partitioned

Synapse Serverless fails with big number of partitions and files for this data (both for PARQUET and DELTA). Various types of timeouts, exceptions, “There is insufficient system memory in resource pool ‘VDWFrontendPool’ to run this query” where popping up.

A few attempts to run Databricks on PARQUET with large cluster were canceled after hours of slow execution.

1 TB partitioned results overview

- It seems the underlying data has too many files, incorrect partition strategy. Synapse was unable to run with PARQUET and DELTA, Databricks struggled with PARQUET.

- Only Databricks on top of DELTA was capable of finishing the runs.

- Databricks cache makes huge difference

Winner - Databricks SQL Analytics on top of DELTA.

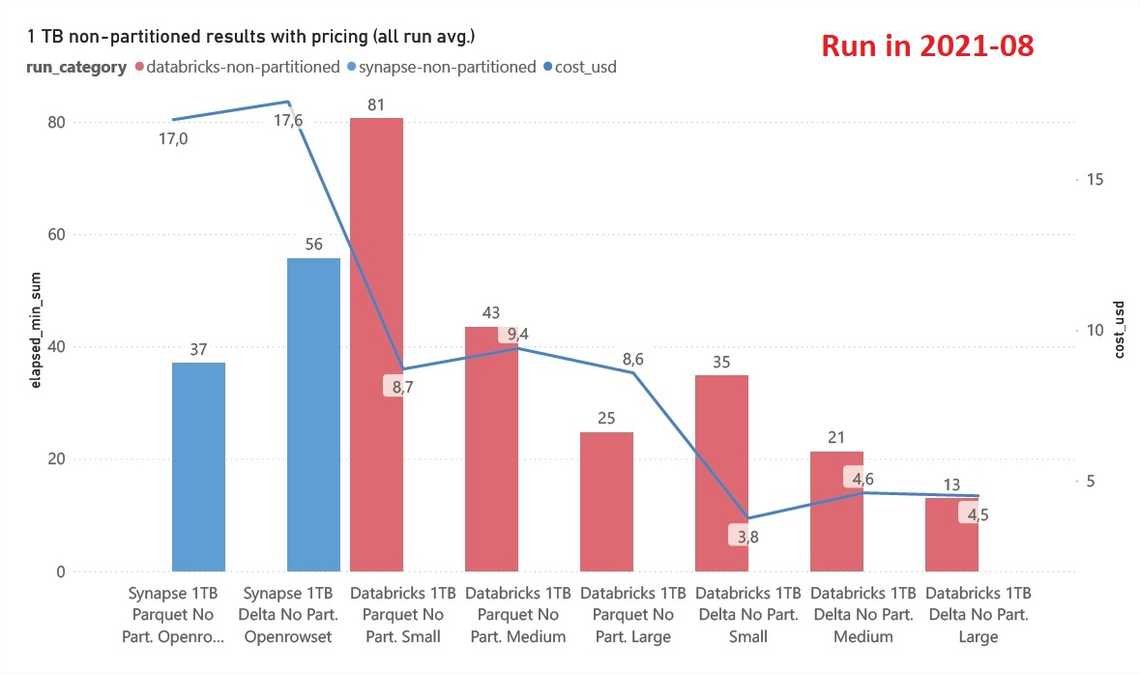

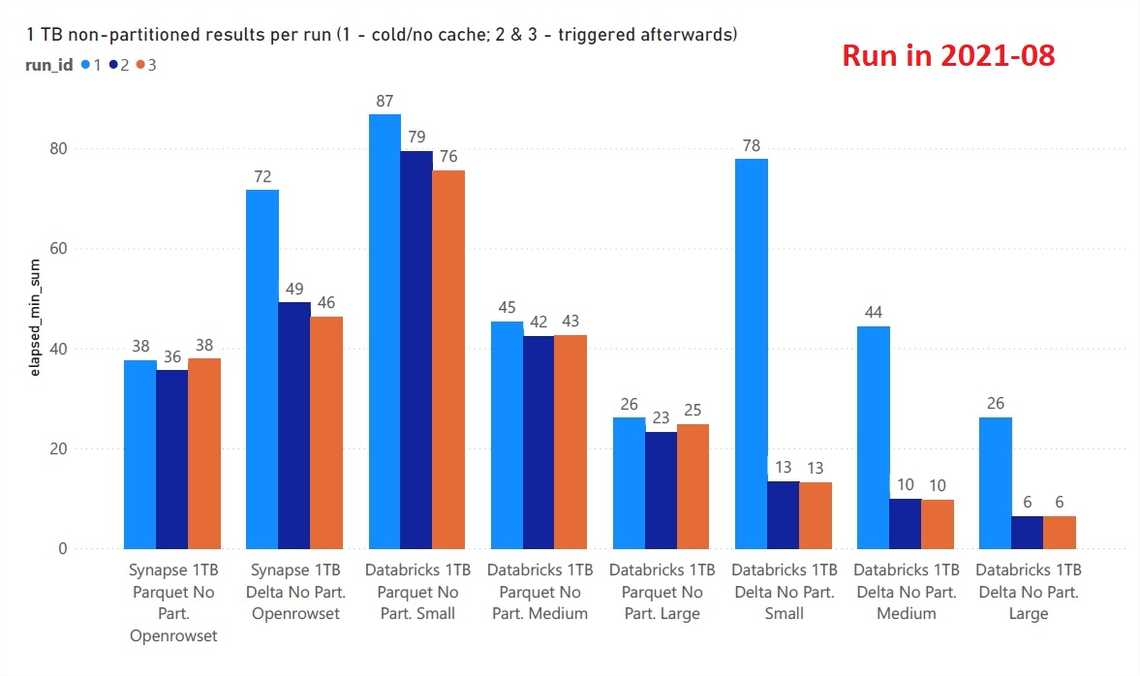

Round 5 - 1 TB non-partitioned

1 TB non-partitioned results overview

- Both Databricks and Synapse Serverless finished all queries

- Synapse provides consistent run times for PARQUET, sligtly faster than Databricks medium cluster on PARQUET

- As expected, larger Databricks clusters give better results (very obvious for non-cached runs)

- PARQUET runs are comparable for Synapse and Databricks

Winner - For PARQUET Synapse Serverless provides similar query times to Databricks, but at a slightly higher cost.

For best overall performance, choose DELTA and Databricks SQL Analytics.

Final observations

Databricks observations

Good

- Enterprise ready solution for various data sizes and different data types

- Developer-friendly approach to work with Delta tables from SQL Analytics portal.

- Cache, photon engine and “hidden” DELTA implementations give fast responses with all data sizes with DELTA format

- Well integrated with all Databricks components (notebooks, MLFlow, Feature Store, etc.)

Bad

- Requires endpoint (cluster) start-up/shut-down, not a serverless offering

- How to decide cluster size? A bigger cluster hasn’t always resulted in faster runs.

Synapse observations

Good

- Modern approach that doesn’t require any cluster startups. It’s there waiting for user’s queries.

- PARQUET performance with Synapse Pools might be even faster using new native technology (not tested)

- Well supported PARQUET, consistent run times

- Part of a bigger Synapse ecosystem solution to cater various data analytical needs

- Data readers can access Serverless using standard SQL Server libraries, with various types of enterprise authentication

Bad

- Limited DELTA support; even DELTA had no transactions, it was slower than the same data in PARQUET

- No data caching available, thought statistics improved the times for 2nd and 3rd runs

- Very sensitive to underlying data issues, performs badly with a high number of files

- Requires view definitions, optimal data sizes for best performance

- Ambiguous access setup with a few moving parts; “Content of directory on path cannot be listed”, “Cannot bulk load. The file does not exist or you don’t have file access rights”

Tags

Share

Related Posts

Other projects